What’s Been on the Amherst Mind?

One of the most critical aspects of being a college student is the ability to make an impact on one’s community and to be impacted by that community. However, many students struggle with a lack of institutional knowledge, a consequence of the relatively short time anyone spends at a college or university. Every year, a fourth of the student body graduates and goes into the real world, taking with it insights and institutional knowledge that only come with experience. Because of this high turnover, history often repeats itself unnecessarily, despite changing times and perspectives. We decided that the best way to map what has changed and what has stayed constant was to explore and unpack the remarkable reservoir of Amherst students’ voices that spans much of the College’s lifespan. For our final blog project, we therefore chose to analyze the corpus of the Amherst Student in order to trace the evolution of the sentiment expressed by Amherst College students over the last twenty years.

What is the Amherst Student?

The Amherst Student is the oldest weekly-run college newspaper in the nation. It has been the main free-of-charge news source on campus since 1868 and has produced award-winning journalists who went on to work at organizations such as the Washington Post, the New York Times, and Vox. Each week during the term, an all-volunteer team of dedicated Amherst students writes, produces, and publishes the newspaper, which includes articles on arts, sports, news, and opinion.

Like many other newspapers, the Amherst Student found in the twenty-first century that the old financial model of relying on ad revenue could no longer keep it afloat. As a result, in 2017, the Amherst Student shifted from being an independent organization to being funded by the Association of Amherst Students. We note this as a possible limitation of our analysis before looking at the data.

The Amherst Muckrake

The Amherst Muckrake is a comedic faux-news publication founded in 1908. Like the Amherst Student, the Muckrake is a student-volunteer-led website that provides commentary on the semesterly happenings of the College. We thought it would be interesting to analyze the sentiment of the Muckrake articles as a gentle comparison with that of the Student. That said, there were some important things to consider before beginning the analysis.

The Muckrake is not as structured as the Student, since its prime objective is satire and it is not a school-supported publication with funding. Unlike the Student, which publishes weekly, the Muckrake mainly produces content when events on campus invite a satirical point of view. The quantity of data available to analyze is therefore significantly smaller than that provided by the Student. The Muckrake is also relatively new in its current form, having been revitalized only in 2012 after an extended period of stagnation. For this reason, we narrowed the scope of our more in-depth analysis of the Student to the years that match the lifespan of the revived Muckrake.

Web-Scraping

The Student

There are three websites that house the corpus of the Amherst Student. We scraped the two sites that cover the years we were interested in, 2000 to 2018. We decided to use Python instead of R for the Student websites because we found object-oriented programming easier to fit to the structure of these websites than vector-based programming. Python also let us reuse the same code across sites with only small adjustments. We scraped the more recent website with Scrapy, a crawling framework that helps navigate websites, and Beautiful Soup, a Python library for parsing HTML documents, by looping over all of the entries in each category's list of articles. For the older site, we ran a for-loop over all the articles on the site and wrote the results to JSON and CSV files so that we could later import the datasets into RStudio. One of the challenges we faced while scraping these two websites was simply missing data: the older observations often lacked article titles, authors, or links.
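The extract-and-export step can be sketched roughly as follows. This is a minimal illustration, not the scraper we actually ran: it uses Python's built-in `html.parser` in place of Scrapy and Beautiful Soup so that it runs without extra installs, and the sample HTML, the class names (`title`, `author`, `content`), and the helper names are all hypothetical.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical markup: the real sites' tags and class names are not shown here.
SAMPLE_HTML = """
<article>
  <h1 class="title">Campus Update</h1>
  <span class="author">Jane Doe</span>
  <div class="content">Snow closed the dining hall.</div>
</article>
<article>
  <div class="content">An older article with no title or author.</div>
</article>
"""

FIELDS = ("title", "author", "content")


class ArticleParser(HTMLParser):
    """Collects one dict per <article>, keyed by the class of each child tag."""

    def __init__(self):
        super().__init__()
        self.articles = []
        self._current = None  # dict for the article being read
        self._field = None    # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            # Missing fields stay None instead of raising an error.
            self._current = dict.fromkeys(FIELDS)
        elif self._current is not None:
            css_class = dict(attrs).get("class")
            if css_class in FIELDS:
                self._field = css_class

    def handle_data(self, data):
        if self._current is not None and self._field and data.strip():
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "article" and self._current is not None:
            self.articles.append(self._current)
            self._current = None
        self._field = None


def to_json(articles):
    """Serialize the scraped rows as JSON text."""
    return json.dumps(articles, indent=2)


def to_csv(articles):
    """Serialize the scraped rows as CSV text; None becomes an empty cell."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(articles)
    return buffer.getvalue()


parser = ArticleParser()
parser.feed(SAMPLE_HTML)
articles = parser.articles
print(to_csv(articles))
```

Keeping absent fields as explicit empty values, rather than dropping the row, is one way to carry incomplete older articles through to the later analysis.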

The following links are for the Amherst Student:

The Muckrake

Scraping the Muckrake was marginally simpler. The website consisted of 97 pages with seven articles on each page. We ran a for-loop over the 97 pages, using the HTML class assigned to each part of an article (title, date, author, content, etc.) to define the variables, which we then assembled into a table that could be further wrangled in R.
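The page loop itself is simple enough to sketch. In the illustration below, the URL pattern, the `fake_fetch` stand-in, and the column names are assumptions made for the example; a real run would replace `fake_fetch` with an HTTP request plus class-based parsing.

```python
from typing import Callable, Dict, List

N_PAGES = 97  # page count reported in the text
BASE_URL = "https://example.com/page/{page}"  # hypothetical URL pattern


def scrape_all_pages(
    fetch_page: Callable[[str], List[Dict[str, object]]],
    n_pages: int = N_PAGES,
) -> List[Dict[str, object]]:
    """Visit each listing page in turn and stack the article rows into one table."""
    table = []
    for page in range(1, n_pages + 1):
        url = BASE_URL.format(page=page)
        for row in fetch_page(url):
            table.append({"page": page, **row})
    return table


def fake_fetch(url: str) -> List[Dict[str, object]]:
    """Stand-in for a real request and parse; returns seven dummy articles."""
    return [
        {"title": f"Article {i} from {url}", "author": "", "date": "", "content": ""}
        for i in range(1, 8)
    ]


table = scrape_all_pages(fake_fetch)
print(len(table))  # 97 pages x 7 articles = 679 rows
```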

The following link is for the Muckrake:

Basic Facts About the Data

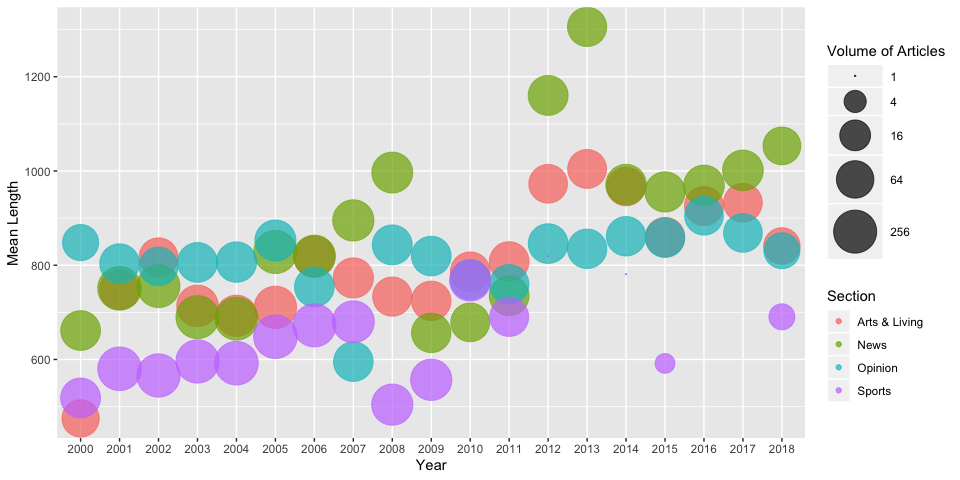

We scraped a total of 9654 articles published between 2000 and 2018, with 2108 articles in the Arts and Living section, 3036 articles in the News section, 1929 articles in the Opinion section, and 2602 articles in the Sports section. News and Opinion articles tend to be longer and similar in length to each other, while Sports and Arts and Living articles tend to be shorter.

As is evident in the following plot, articles in the News and Arts sections have grown longer in recent years, while Opinion articles have remained fairly consistent in length. These three sections have also maintained a steady level of output over time. The Sports section, however, has significantly reduced both its output and its article length.

Data Wrangling

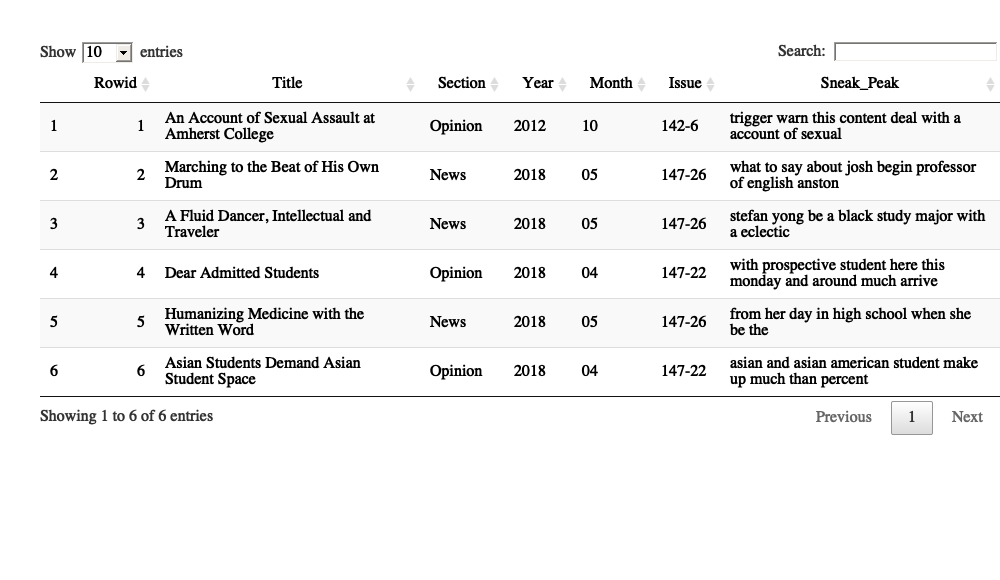

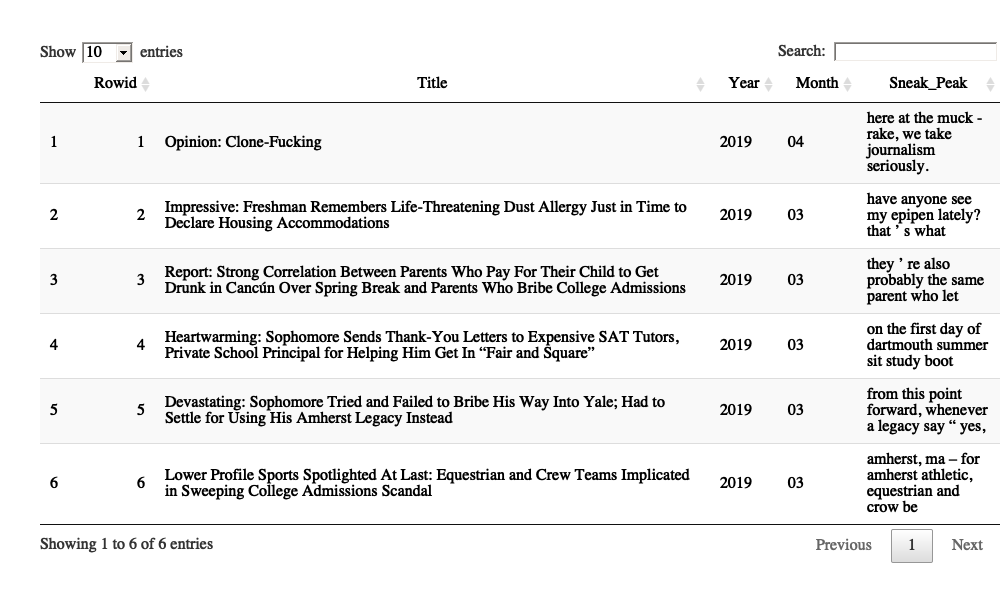

After the web-scraping process was complete, we were left with two semi-clean datasets that required some mild wrangling. First, we created a rowid for each document so that we could easily identify the document a word came from further along in the analysis. Second, for each dataset, we selected the variables relevant to answering our question. Third, we removed all formatting from the content to make the analysis more efficient: we reduced every word to its root form and removed all punctuation and unusual characters. Finally, we removed the Sports and Arts and Living sections, leaving News and Opinion, because we felt that these two sections would better capture the general Amherst student sentiment at any given time. To pare down the dataset further, we also decided to analyze the Student only for the years in which the Muckrake had available data. The final product before requesting from the API was one dataset each for the Student and the Muckrake. Within each dataset, every observation represented one article and included its date, issue number, title, author, and content. The Student dataset included upwards of 2000 observations and the Muckrake dataset included upwards of 650 observations.
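These wrangling steps can be sketched as below. We did this work in R; the Python here is only an illustration, the sample rows are invented, and the reduction of words to their roots is left out because it needs a lemmatizer that is not part of the standard library.

```python
import re

# Invented sample rows; the real datasets also carry date, issue number, and author.
raw_articles = [
    {"section": "News", "year": 2013, "title": "Budget Vote", "content": "The Senate voted: 12-3!"},
    {"section": "Sports", "year": 2014, "title": "Big Win", "content": "Amherst wins, 3-1."},
    {"section": "Opinion", "year": 2009, "title": "Old Column", "content": "Before the window."},
    {"section": "Opinion", "year": 2016, "title": "On Val", "content": "Val should stay open later…"},
]

KEEP_SECTIONS = {"News", "Opinion"}
FIRST_YEAR = 2012  # the Muckrake's revival year, per the text


def clean_text(text):
    """Lowercase, drop punctuation and unusual characters, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def wrangle(articles):
    """Assign a rowid, keep only the sections and years of interest, clean the text."""
    rows = []
    for rowid, article in enumerate(articles, start=1):
        if article["section"] not in KEEP_SECTIONS:
            continue
        if article["year"] < FIRST_YEAR:
            continue
        rows.append({
            "rowid": rowid,
            "year": article["year"],
            "title": article["title"],
            "content": clean_text(article["content"]),
        })
    return rows


clean_articles = wrangle(raw_articles)
for row in clean_articles:
    print(row)
```

Assigning the rowid before filtering means an ID always points back to the same scraped article, even after rows are dropped.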

The final Student dataset before API:

The final Muckrake dataset before API:

Document Analysis with NLP

Why NLP? What is NLP?

Since we had such an expansive and specific set of documents to work with, all relating to Amherst College affairs, we wanted a more comprehensive tool for analyzing the corpus than the sentiment lexicons available through tidytext’s get_sentiments() function. After some gentle Google searching, we came across googlenlp, an R package that uses Google Cloud’s Natural Language API to perform document analysis.

Elements of Interest

googlenlp offers four analysis functions: syntax analysis, language analysis, document sentiment analysis, and entity analysis. We used the latter two. The function for document sentiment analysis considered each article and assigned a score and a magnitude based on the sentiment of the text overall. The score captures the general emotional leaning of the text and ranges from negative one to positive one. The magnitude captures the overall strength of emotion within each text, regardless of whether that emotion is positive or negative; each word’s emotional strength contributes to this number. We decided not to use the magnitude in our analysis because it tended to be greater for longer texts and therefore said little about our overarching question. The function for entity analysis broke each document down into word and phrase tokens and assigned each token an entity type. We chose to focus on the person, organization, location, and event entities for the Student analysis and on the person, organization, and location entities for the Muckrake analysis.
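To see why an unnormalized magnitude grows with article length while a score does not, consider the toy model below. It is our own simplification and not Google's actual formula: we assume the score behaves like an average of per-sentence scores and the magnitude like a sum of their absolute values.

```python
def toy_document_sentiment(sentence_scores):
    """Toy aggregate: score = mean sentence score, magnitude = sum of absolute scores."""
    score = sum(sentence_scores) / len(sentence_scores)
    magnitude = sum(abs(s) for s in sentence_scores)
    return round(score, 2), round(magnitude, 2)


short_article = [0.5, -0.5]        # one positive and one negative sentence
long_article = short_article * 10  # the same mix, ten times as long

print(toy_document_sentiment(short_article))  # (0.0, 1.0)
print(toy_document_sentiment(long_article))   # (0.0, 10.0)
```

Both articles are equally mixed in tone, yet the longer one reports ten times the magnitude, which is the length effect that led us to set magnitude aside.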

Requesting the API - Application Programming Interface

One of the most time-consuming challenges we faced during this project was requesting from the API. Moreover, we had to be cognizant of the fact that each API request required money and we had a limited budget. We created a for-loop that looped over each observation. Each iteration of this loop returned a new list that included the four elements of analysis for that specific article. We then extracted the two previously identified elements of interest. We had to run this loop four times: once each to extract the entity tables for the Student and the Muckrake as well as once each to extract the document sentiment tables. Within the loop, we created a document ID that identified the document origin for each entity. This would help merge the tables extracted from the API with those that we had already wrangled. We completed the for-loop by binding each table to the next to create one mega table for each of the four loops that contained all the relevant data. In order to minimize the number of requests and conserve our budget, we saved each of the mega tables as a .Rda file that we could then load into any analysis code.

Here is an example of our for-loop code:

    library(dplyr)     # Provides %>%, select(), filter(), and mutate().
    library(googlenlp) # Provides analyze_entities().

    for (i in 1:2217) {
      # We isolated each article row.
      articleRow <- lemma_articles %>%
        select(rowid, content) %>%
        filter(rowid == i)

      # We then ran the request to the API for that article row.
      row_entities <- analyze_entities(text_body = articleRow$content)

      # We then extracted the element from the list that was returned by the request.
      entities <- row_entities$entities %>%
        mutate(document = i) # We assigned a document ID.

      if (i == 1) {
        entities_all <- entities # We established the structure of the desired table.
      } else {
        entities_all <- rbind(entities_all, entities) # We bound each article table together.
      }
    }
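The request-once-and-save idea that protected our budget can be sketched in a few lines. This is an illustration in Python rather than our R code: `fake_analyze` is a hypothetical stand-in for the paid API call, and the JSON cache file plays the role of our .Rda files.

```python
import json
import os
import tempfile


def analyze_all(articles, analyze, cache_path):
    """Request entities for every article once, then reuse the saved table."""
    if os.path.exists(cache_path):
        with open(cache_path) as f:
            return json.load(f)  # no new (billable) requests are made

    entities_all = []
    for article in articles:
        for entity in analyze(article["content"]):
            # Tag each entity with the document it came from.
            entities_all.append({"document": article["rowid"], **entity})

    with open(cache_path, "w") as f:
        json.dump(entities_all, f)
    return entities_all


calls = []


def fake_analyze(text):
    """Hypothetical stand-in for the paid API call; records how often it runs."""
    calls.append(text)
    return [{"name": word, "type": "OTHER"} for word in text.split()[:2]]


articles = [
    {"rowid": 1, "content": "biddy martin spoke"},
    {"rowid": 2, "content": "val reopened"},
]

with tempfile.TemporaryDirectory() as tmp:
    cache = os.path.join(tmp, "entities_all.json")
    first = analyze_all(articles, fake_analyze, cache)
    second = analyze_all(articles, fake_analyze, cache)  # served from the cache

print(len(calls))  # one call per article, despite two runs
```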

Post-NLP Wrangling

When we finished requesting the API, we once again selected variables relevant to our analysis. We then summed the number of times each word appeared in the text. Finally, we joined each final dataset from before requesting the API with each mega dataset post requesting the API in order to map each entity and document sentiment to the date of its originating article.
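The count-then-join step looks roughly like this. The rows are invented for illustration and the column names (`document`, `name`, `n`) are our own choices for the sketch, not necessarily those in our R tables.

```python
from collections import Counter

# Pre-API table, keyed by document ID (invented rows).
articles = {
    1: {"date": "2015-11-18", "section": "News"},
    2: {"date": "2016-02-03", "section": "Opinion"},
}

# Post-API entity table: one row per entity mention (invented rows).
entities = [
    {"document": 1, "name": "uprising"},
    {"document": 1, "name": "uprising"},
    {"document": 1, "name": "frost"},
    {"document": 2, "name": "uprising"},
]

# Count how many times each entity appears in each document.
counts = Counter((e["document"], e["name"]) for e in entities)

# Join the counts back to the article metadata through the document ID.
joined = [
    {"document": doc, "name": name, "n": n, **articles[doc]}
    for (doc, name), n in sorted(counts.items())
]

for row in joined:
    print(row)
```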

NLP Visualizations

We created nine visualizations, including bar plots, box plots, word clouds, and faceted bar graphs, to help summarize our findings.

Entity Analysis

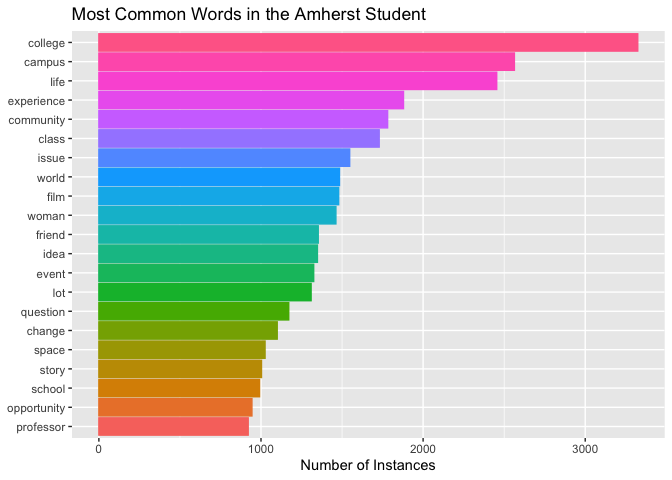

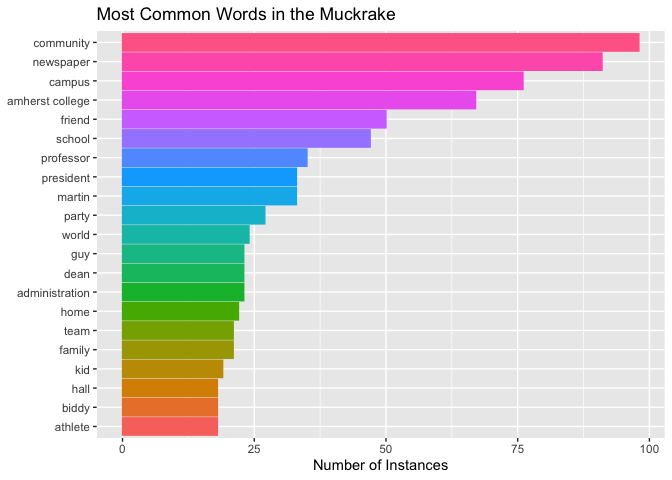

The two word frequency bar plots below show the set of words that are used with the highest frequency in both publications. By viewing these plots consecutively, we see the commonalities as well as the differences between the two mediums.

The Student:

Some words common to the two tables are campus, world, community, friend, and home.

The Muckrake:

A point of interest is that the Muckrake mentions “martin” as well as “biddy” with relatively high frequency while the Student does not. We believe this might be related to the fact that the Muckrake tends to focus on campus-wide “scandals” while the Student reports on a wide variety of subjects.

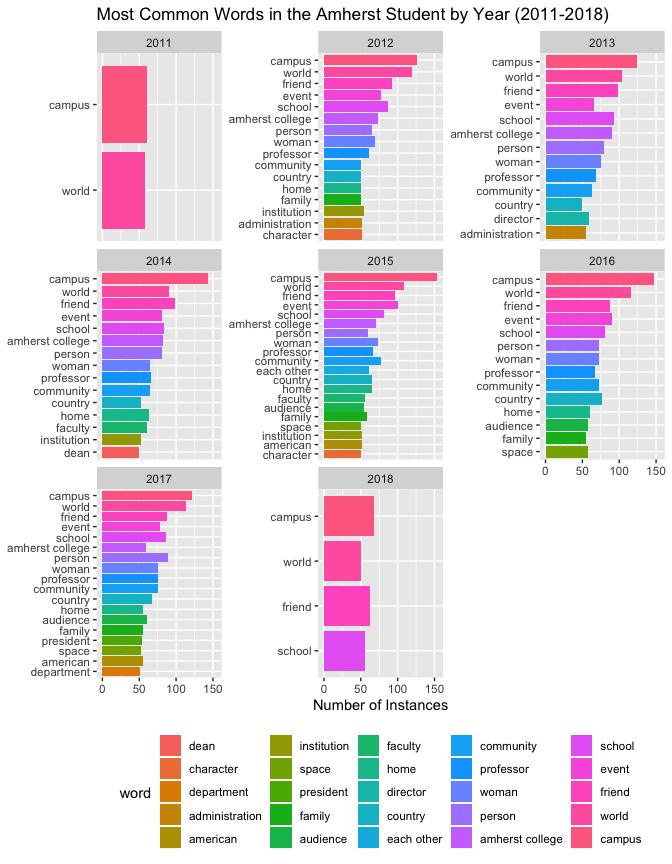

We also created a frequency barplot faceted by year for the Student. This visualization demonstrates the change in the most frequently used terms over time. Some of the common words used in the span of the last eight years are campus, world, friend, women, community, school, and home. Audience, institution, and american have only been frequent in the more recent years.

Document Sentiment Analysis

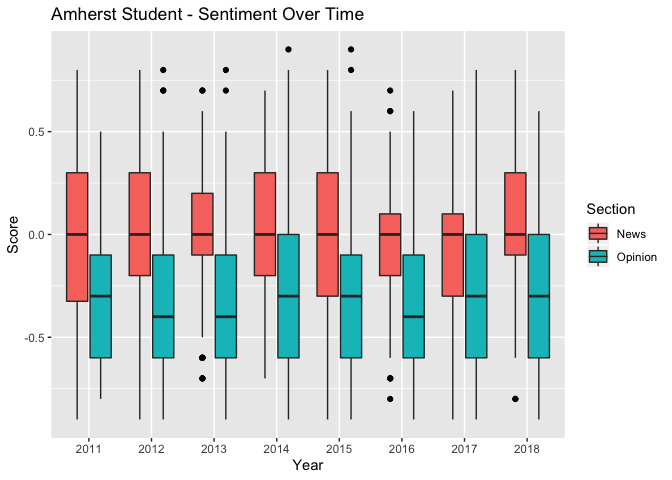

We constructed two boxplots to display the net sentiment of articles by year. The first shows the boxplot for the Student overall while the second shows the net sentiment of articles that mention Biddy.

Overall Sentiment

We were impressed to note that the median score for the Student’s news articles has remained neutral throughout the years in question, while opinion articles have been consistently negative. That means it IS possible for news to be reported neutrally!
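The quantity behind each box's center line is a median score per year and section. A minimal sketch of that grouping follows; the scores are invented for illustration and are not our results.

```python
from collections import defaultdict
from statistics import median

# Invented document sentiment scores, one row per article.
scores = [
    {"year": 2015, "section": "News", "score": 0.0},
    {"year": 2015, "section": "News", "score": 0.1},
    {"year": 2015, "section": "News", "score": -0.1},
    {"year": 2015, "section": "Opinion", "score": -0.3},
    {"year": 2015, "section": "Opinion", "score": -0.1},
    {"year": 2015, "section": "Opinion", "score": -0.2},
]

# Group the scores by (year, section), then take the median of each group.
groups = defaultdict(list)
for row in scores:
    groups[(row["year"], row["section"])].append(row["score"])

medians = {key: median(values) for key, values in groups.items()}
print(medians)
```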

Biddy Sentiment

We see a similar trend in the Biddy boxplot. Aside from a few years (2014-2017), the news articles have mainly remained neutral with regard to Biddy while the opinion articles have been consistently negative. The exceptional years can be explained by the events and activities surrounding Amherst Uprising.

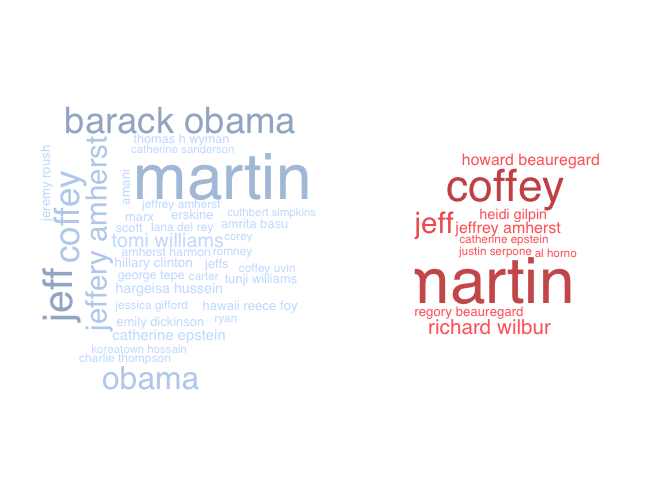

Word Clouds

We developed two word clouds to visualize the people frequently mentioned in the Student and the Muckrake. Some names that are common to both are “martin”, “coffey”, and “jeff”. These names align with various events of note in the recent history of the College, such as the revision of the party policy and the introduction of a new and improved school mascot. We also discovered that many of the people often mentioned in the Student tend to be administrative staff and athletes.

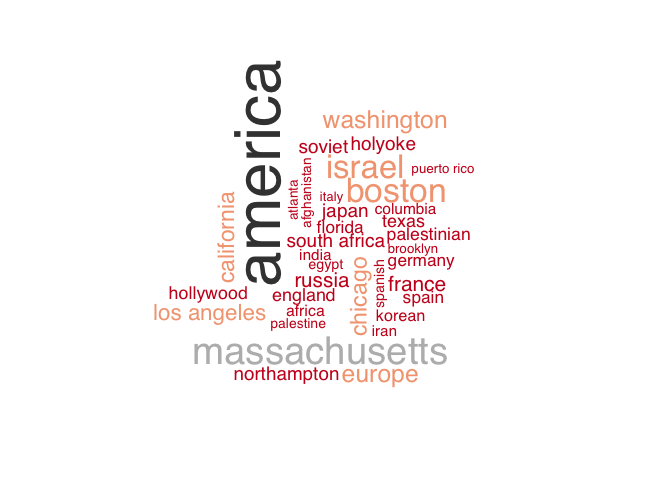

Below is a word cloud that highlights the frequency of locations mentioned in the Student over the years. Many of the frequently mentioned locations relate to world politics and news. Unlike the name word clouds, which were chiefly related to internal campus matters, the location word cloud seems to relate more to external matters.

Evolution of Topics Over Time

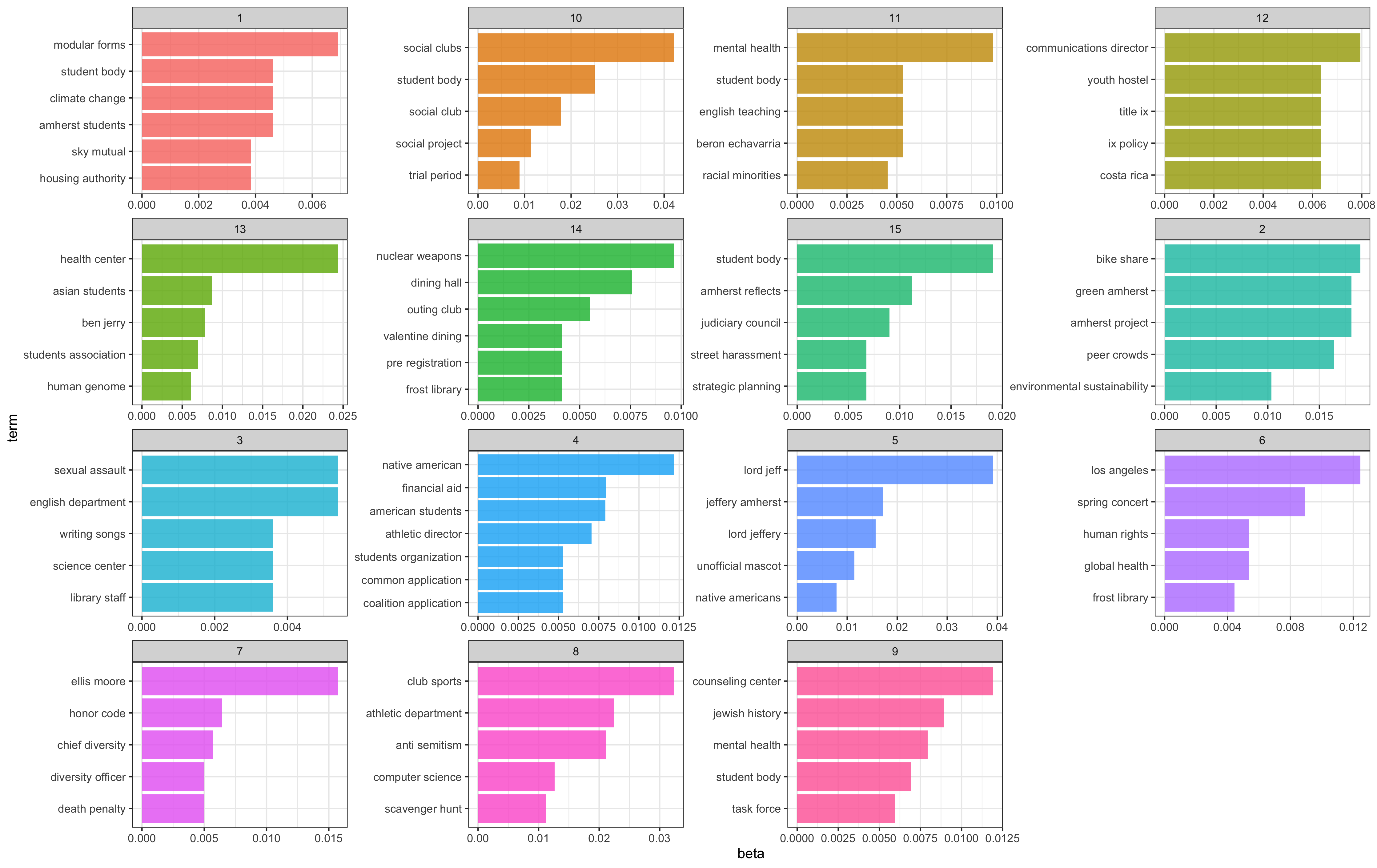

While analyzing our data, we found ourselves overwhelmed with content and wanted a way to surface the general topics being discussed without having to trudge through the enormous data files. During this process, we learned about Latent Dirichlet Allocation (LDA), an unsupervised topic-modeling algorithm that groups the words of a corpus into k topics, where k, the number of topics, is supplied as an input. For each topic, the model estimates a beta value for every term: the probability of that term under that topic. By leveraging the lda package, we were able to extract a more comprehensive understanding of the corpus, allowing us to develop more in-depth insights.
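To make the idea of k topics and per-term beta values concrete, here is a toy topic model written from scratch: a small collapsed Gibbs sampler for latent Dirichlet allocation. It is not the lda package we used, the four mini-documents are invented, and the hyperparameters `alpha` and `eta` are arbitrary choices for the sketch.

```python
import random


def fit_lda(docs, k, iterations=200, alpha=0.1, eta=0.01, seed=0):
    """Collapsed Gibbs sampler for LDA; returns beta[topic][word] = P(word | topic)."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    v = len(vocab)

    doc_topic = [[0] * k for _ in docs]  # tokens of doc d assigned to topic t
    topic_word = [dict.fromkeys(vocab, 0) for _ in range(k)]
    topic_total = [0] * k
    assignments = []

    # Start from a random topic for every token.
    for d, doc in enumerate(docs):
        zs = []
        for w in doc:
            t = rng.randrange(k)
            zs.append(t)
            doc_topic[d][t] += 1
            topic_word[t][w] += 1
            topic_total[t] += 1
        assignments.append(zs)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                t = assignments[d][i]
                # Remove the token, then resample its topic given all the others.
                doc_topic[d][t] -= 1
                topic_word[t][w] -= 1
                topic_total[t] -= 1
                weights = [
                    (doc_topic[d][j] + alpha)
                    * (topic_word[j][w] + eta) / (topic_total[j] + v * eta)
                    for j in range(k)
                ]
                t = rng.choices(range(k), weights=weights)[0]
                assignments[d][i] = t
                doc_topic[d][t] += 1
                topic_word[t][w] += 1
                topic_total[t] += 1

    # Smoothed word probabilities per topic; each topic's betas sum to one.
    return [
        {w: (topic_word[t][w] + eta) / (topic_total[t] + v * eta) for w in vocab}
        for t in range(k)
    ]


def top_terms(beta, n):
    """The n highest-beta words for each topic."""
    return [sorted(topic, key=topic.get, reverse=True)[:n] for topic in beta]


docs = [
    "mascot vote mascot jeff".split(),
    "jeff mascot vote trustees".split(),
    "budget tuition aid budget".split(),
    "aid tuition budget trustees".split(),
]
beta = fit_lda(docs, k=2)
print(top_terms(beta, 3))
```

Because the sampler is random, the topics it finds depend on the seed and the number of iterations; on a corpus this small the output is only meant to show the shape of the result, a ranked list of terms per topic.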

The following is an example of the code used to designate the topics:

    library(dplyr)
    library(ggplot2)
    library(tidytext) # Provides reorder_within() and scale_x_reordered().

    # Highest-beta bigrams within each topic for a single year.
    top_topics_bigram_year <- bigrambyyear %>%
      filter(year == 2010) %>%
      group_by(topic) %>%
      top_n(8, beta) %>% # Keep the 8 terms with the highest beta in each topic; a higher beta means a closer relation.
      ungroup() %>%
      arrange(topic, beta) %>%
      mutate(term = reorder_within(term, beta, topic))

    # Visualization of the top bigram topic clusters by year.
    ggplot(top_topics_bigram_year, aes(term, beta, fill = topic)) +
      geom_col(alpha = 0.8, show.legend = FALSE) +
      scale_x_reordered() +
      facet_wrap(facets = "topic", ncol = 4, scales = "free") +
      coord_flip()

The topic analysis gave us a view of both the internal and the external changes that occurred during the period of interest. We observed topics and themes related to global politics and events such as the 9/11 attacks, the Haitian earthquake, and the Great Recession. More interestingly, we were also able to pinpoint some major Amherst changes and events such as Amherst Uprising, the evolution of Val, and the changing of the mascot.

Here is an example of the visualizations we used to determine the topics surrounding different clusters of words. A higher beta along the x-axis indicates that a particular word is more relevant to that topic. As is evidenced by this visualization, in 2015, some important topics discussed relate to Amherst Uprising, mental health, and Lord Jeff.

The following list represents some of the most important topics we discovered in a given year:

2000 - Race relations, international students, dating on campus

2001 - Freshman Housing, Police Reports, terrorist attacks, housing

2002 - Racial profiling, Iraq war, alcohol

2003 - Honor code changes, Ben Liever, Norman Lentz, new college president Anthony Marx

2004 - Study Abroad, admission committee, AAS senate, gay marriage

2005 - President Anthony Marx, financial aid, College Republicans, Bird Sanctuary, Academic Dishonesty

2006 - Amherst Police Reports, financial aid, eating disorders/health

2007 - Virginia Tech, community engagement

2008 - Financial Crisis, blind admissions, elections

2009 - Tenure track, search committee, increase in size of the college

2010 - Haitian Earthquake, sexual awareness, spring concert, Mount Holyoke College

2011 - Sexual assault, social life, stress, low-income students

2012 - Emily Dickinson, sexual violence, Title IX, AAS, College budget

2013 - Tuition Assistance, Club Soccer, sexual assault

2014 - Digital Humanities, Drew House, party policy, social clubs, Black Lives Matter

2015 - Amherst Uprising, Resource Centers, Chief Diversity Officer, Lord Jeff, mental health

2016 - Education Studies, birth control, MRC, Amherst Uprising

2017 - Asian American experience, Amherst Uprising, Accessibility, reslife, gun control

Limitations

Just like any natural science project, our data science project has evolved from what we imagined it to be when we began. As we encountered challenges and limitations along the way, we were forced to come up with solutions that altered our course and produced unexpected results. The main limitations we came across were time, money, gaps in the data, and the shortcomings of sentiment analysis. First, due to the sheer size of the data and the limited time we had to complete this project, we were unable to run all the tests and consider all the aspects of the data that would have made our findings more conclusive. Second, as mentioned above in the API section, our limited budget was also an issue. If we had had the opportunity to run the entire dataset through the API, we might have seen a different trend over time and across sections. Third, as with any data that relies on human input, our analysis of Amherst College students’ sentiment over time relies solely on the people who write for the Student and the Muckrake. We know, as data science students, that this is not necessarily the most comprehensive representation of students’ views on the College. Finally, it is realistically impossible for any sentiment analysis method to yield perfectly accurate results because a computer is not able to fully understand human emotion. It is important to acknowledge this fact and keep it in mind while relying on an API to analyze these data.

Summary and Conclusion

We explored the Amherst Student corpus to determine the evolution of Amherst College students’ sentiment over the last two decades. Through the process of analysis, we saw many of the changes we know to have taken place over that timespan reflected in the text. We found that the news articles have remained neutral throughout the years, just as the opinion articles have been consistently negative with minimal fluctuations.

Along with the long list of topics, both internal and external, that have evolved over time, we also identified some terms that have consistently appeared in the text over the last two decades, such as community, friend, world, school, and home. The frequent appearance of such terms suggests that these are all things that Amherst College students feel strongly about. With more time and deeper analysis, we might be able to discern which of these words are associated with positive or negative sentiments. For the time being, this task simply extends beyond the scope of our project.

Future Goals

Since we have identified a lack of historical knowledge about an institution as a serious hindrance to a college student’s ability to impact and be impacted by their community, we wish to make our findings public and accessible by creating an open dataset. We hope that a clean, wrangled dataset showing the different issues Amherst College students have engaged with over time will make it easier for others to positively impact our community, and that in providing it we will have positively impacted our community as well.

- By Dylan Momplaisir, Trishala Roy, and Eric Sellew